Survey 7: CNN Models

Here’s a list of major CNN (Convolutional Neural Network) variants, roughly in order of publication. CNNs have evolved over the years to improve accuracy, reduce computational cost, and adapt to different domains. The list closes with Vision Transformers, a non-convolutional architecture included for comparison.

1. LeNet (1998)

- Key Idea: One of the first CNN architectures, designed for handwritten digit recognition (MNIST). It used convolutional layers followed by pooling and fully connected layers.

- Application: Digit classification.

- Notable Paper: Yann LeCun et al., “Gradient-Based Learning Applied to Document Recognition”, 1998.

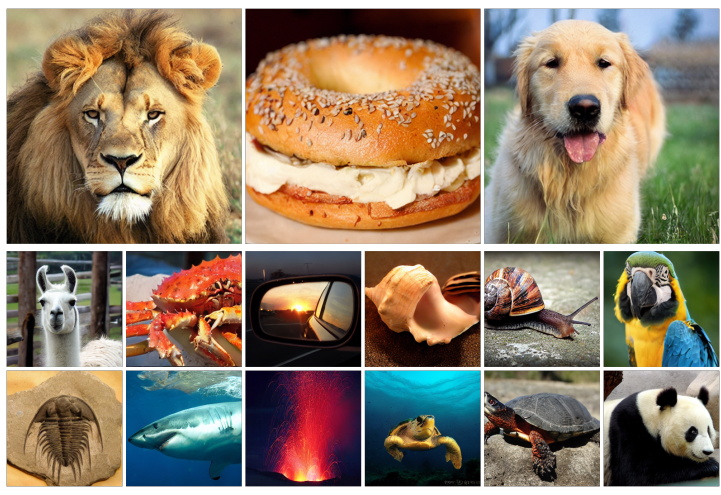
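The conv → pool → fully connected stacking can be traced with the standard output-size formula for a convolution or pooling window, ⌊(n + 2p − k)/s⌋ + 1. Below is a minimal sketch, assuming the LeNet-5 layout of a 32×32 input, unpadded 5×5 convolutions, and 2×2 subsampling with stride 2; the helper names are hypothetical.

```python
def out_size(n: int, k: int, s: int = 1, p: int = 0) -> int:
    """Spatial side length after a conv/pool window of size k, stride s, padding p."""
    return (n + 2 * p - k) // s + 1


def lenet5_sizes(n: int = 32) -> list[tuple[str, int]]:
    """Trace the feature-map side length through a LeNet-5-style stack."""
    trace = [("input", n)]
    layers = [("C1 conv 5x5", 5, 1), ("S2 pool 2x2", 2, 2),
              ("C3 conv 5x5", 5, 1), ("S4 pool 2x2", 2, 2),
              ("C5 conv 5x5", 5, 1)]
    for name, k, s in layers:
        n = out_size(n, k, s)
        trace.append((name, n))
    return trace


for name, size in lenet5_sizes():
    print(f"{name:12s} {size}x{size}")
```

C5 ends at 1×1, so its 120 feature maps behave like a fully connected layer feeding the classifier.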

2. AlexNet (2012)

- Key Idea: A deep CNN with 8 learned layers (5 convolutional and 3 fully connected) that demonstrated the power of CNNs by winning the ImageNet Large Scale Visual Recognition Challenge (ILSVRC) 2012. It popularized ReLU activations (rather than introducing them), used dropout to reduce overfitting, and was trained on GPUs.

- Application: Image classification (ImageNet).

- Notable Paper: Alex Krizhevsky et al., “ImageNet Classification with Deep Convolutional Neural Networks”, 2012.
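One reason ReLU helped AlexNet train faster is that it does not saturate for positive inputs: its gradient stays at 1, whereas the gradient of tanh shrinks toward 0 as |x| grows. A minimal sketch comparing the two derivatives with the standard library; the function names are illustrative.

```python
import math


def relu(x: float) -> float:
    return max(0.0, x)


def relu_grad(x: float) -> float:
    # Subgradient convention: use 0 at x == 0.
    return 1.0 if x > 0 else 0.0


def tanh_grad(x: float) -> float:
    # d/dx tanh(x) = 1 - tanh(x)^2, which vanishes for large |x|.
    return 1.0 - math.tanh(x) ** 2


for x in [0.5, 2.0, 5.0]:
    print(f"x={x}: relu'={relu_grad(x):.4f}  tanh'={tanh_grad(x):.6f}")
```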

3. ZFNet (2013)

- Key Idea: Improved on AlexNet by using smaller first-layer filters (7×7 instead of 11×11) with a smaller stride (2 instead of 4). These changes were guided by a deconvolution-based technique for visualizing what each layer learns.

- Application: Image classification (ImageNet).

- Notable Paper: Matthew Zeiler & Rob Fergus, “Visualizing and Understanding Convolutional Networks”, 2013.
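ZFNet's visualizations project activations back to pixel space with a deconvolutional network. A key step is "unpooling": each pooled value is placed back at the location recorded (the "switch") during max pooling, and every other position is filled with zeros. A minimal pure-Python sketch of 2×2, stride-2 max pooling with switches and the matching unpooling on a single-channel map; the function names are hypothetical.

```python
def max_pool_with_switches(x):
    """2x2 max pooling, stride 2. Returns the pooled map and argmax positions."""
    h, w = len(x), len(x[0])
    pooled, switches = [], []
    for i in range(0, h - 1, 2):
        prow, srow = [], []
        for j in range(0, w - 1, 2):
            window = [(x[i + di][j + dj], (i + di, j + dj))
                      for di in range(2) for dj in range(2)]
            value, pos = max(window, key=lambda t: t[0])
            prow.append(value)
            srow.append(pos)
        pooled.append(prow)
        switches.append(srow)
    return pooled, switches


def unpool(pooled, switches, h, w):
    """Place each pooled value at its recorded switch; zeros elsewhere."""
    out = [[0.0] * w for _ in range(h)]
    for prow, srow in zip(pooled, switches):
        for value, (i, j) in zip(prow, srow):
            out[i][j] = value
    return out


x = [[1, 3, 2, 0],
     [4, 2, 1, 5],
     [0, 1, 7, 2],
     [6, 2, 3, 1]]
pooled, switches = max_pool_with_switches(x)
print(pooled)  # [[4, 5], [6, 7]]
print(unpool(pooled, switches, 4, 4))
```

In the full deconvnet, the unpooled map then passes through a ReLU and transposed filters; this sketch covers only the unpooling step.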

4. VGGNet (2014)

- Key Idea: Used very small (3×3) convolution filters throughout and pushed depth to 16–19 weight layers. The architecture is deliberately uniform: stacks of 3×3 convolutions separated by max pooling, with the same filter size everywhere.

- Application: Image classification (ImageNet).

- Notable Paper: Simonyan & Zisserman, “Very Deep Convolutional Networks for Large-Scale Image Recognition”, 2014.
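The case for small filters is arithmetic. A stack of n 3×3 convolutions (stride 1) has the same receptive field as a single (2n+1)×(2n+1) convolution but fewer weights, and it adds a nonlinearity per layer. A minimal sketch, assuming C input and C output channels per layer and ignoring biases, which matches the comparison made in the VGG paper (27C² for three 3×3 layers versus 49C² for one 7×7 layer).

```python
def stacked_3x3(n_layers: int, c: int) -> tuple[int, int]:
    """Receptive field and weight count of n stacked 3x3 convs (C -> C channels)."""
    receptive_field = 1 + 2 * n_layers
    weights = n_layers * 3 * 3 * c * c
    return receptive_field, weights


def single_conv(k: int, c: int) -> int:
    """Weight count of one k x k conv with C input and C output channels."""
    return k * k * c * c


c = 256
for n in (2, 3):
    rf, w_stack = stacked_3x3(n, c)
    w_single = single_conv(rf, c)
    print(f"{n} x 3x3: RF {rf}x{rf}, {w_stack:,} weights vs {w_single:,} for one {rf}x{rf}")
```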

5. GoogLeNet (Inception v1) (2014)

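- Key Idea: Introduced the Inception module, which applies 1×1, 3×3, and 5×5 convolutions and max pooling in parallel and concatenates the results, with 1×1 convolutions reducing dimensionality before the larger filters. It also used global average pooling before the classifier (an idea adopted from Network in Network) instead of large fully connected layers, cutting parameters and reducing overfitting.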

- Application: Image classification (ImageNet).

- Notable Paper: Christian Szegedy et al., “Going Deeper with Convolutions”, 2014.
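An Inception module runs several branches side by side and concatenates their outputs along the channel axis, and 1×1 "reduction" convolutions in front of the larger filters keep the cost manageable. A minimal sketch of that bookkeeping, using branch widths modeled on the inception (3a) block of GoogLeNet as an assumption (192 input channels), with biases ignored.

```python
# Branch widths modeled on GoogLeNet's inception (3a) block (assumed here).
C_IN = 192
BRANCHES = {"1x1": 64, "3x3": 128, "5x5": 32, "pool_proj": 32}
REDUCE = {"3x3": 96, "5x5": 16}  # 1x1 reduction widths before the 3x3 / 5x5 convs


def conv_weights(k: int, c_in: int, c_out: int) -> int:
    """Weight count of a k x k convolution (biases ignored)."""
    return k * k * c_in * c_out


# Channel concatenation: output depth is the sum of the branch widths.
out_channels = sum(BRANCHES.values())

# 5x5 branch with and without the 1x1 reduction in front of it.
naive_5x5 = conv_weights(5, C_IN, BRANCHES["5x5"])
reduced_5x5 = (conv_weights(1, C_IN, REDUCE["5x5"])
               + conv_weights(5, REDUCE["5x5"], BRANCHES["5x5"]))

print("output channels:", out_channels)  # output channels: 256
print("5x5 branch weights:", naive_5x5, "->", reduced_5x5)
```

The 1×1 reduction cuts the 5×5 branch's weights by roughly a factor of ten here, which is what makes running the larger filters in parallel affordable.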

6. ResNet (2015)

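- Key Idea: Introduced residual (skip) connections, so that a block learns a residual F(x) that is added to its input instead of learning a direct mapping. This eased the optimization of very deep networks (addressing the "degradation" problem, where adding layers increased training error) and made networks of up to 152 layers trainable on ImageNet.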

- Application: Image classification, object detection.

- Notable Paper: Kaiming He et al., “Deep Residual Learning for Image Recognition”, 2015.
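A residual block computes y = F(x) + x, so its layers only need to learn the residual F. One consequence: if F's weights are zero, the block reduces to (a ReLU of) the identity, so in principle extra blocks need not make the network worse. A minimal pure-Python sketch of the two-layer form F(x) = W2·ReLU(W1·x) with a ReLU applied after the addition, following the ResNet paper's description; biases are omitted and the helper names are hypothetical.

```python
def matvec(w, x):
    """Multiply matrix w (list of rows) by vector x."""
    return [sum(wij * xj for wij, xj in zip(row, x)) for row in w]


def relu(v):
    return [max(0.0, a) for a in v]


def residual_block(x, w1, w2):
    """y = ReLU(F(x) + x) with F(x) = W2 . ReLU(W1 . x); biases omitted."""
    f = matvec(w2, relu(matvec(w1, x)))
    return relu([fi + xi for fi, xi in zip(f, x)])


x = [1.0, 2.0, 0.5]
zeros = [[0.0] * 3 for _ in range(3)]
print(residual_block(x, zeros, zeros))  # [1.0, 2.0, 0.5]: identity for x >= 0

w1 = [[0.1, 0.0, 0.0], [0.0, 0.1, 0.0], [0.0, 0.0, 0.1]]
print(residual_block(x, w1, w1))        # a small correction on top of x
```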

7. Inception v2 and v3 (2015-2016)

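- Key Idea: Further refinements of the original Inception network, including factorized convolutions (for example, a 5×5 convolution replaced by two 3×3 convolutions, and an n×n convolution by a 1×n followed by an n×1) to reduce parameters and computation, plus training improvements such as batch normalization and label smoothing.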

- Application: Image classification.

- Notable Paper: Christian Szegedy et al., “Rethinking the Inception Architecture for Computer Vision”, 2015.
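Asymmetric factorization rests on a simple fact: a 3×3 kernel that is the outer product of a 3×1 column and a 1×3 row can be applied as a 3×1 convolution followed by a 1×3 convolution, using 6 weights instead of 9 (one third fewer). In Inception v3 the two factors are separately learned layers with a nonlinearity between them, so the exact equivalence shown here holds only for this linear, rank-1 case. A minimal pure-Python sketch using "valid" cross-correlation; the helper names are hypothetical.

```python
def correlate2d(x, k):
    """'Valid' 2-D cross-correlation of map x with kernel k (lists of rows)."""
    kh, kw = len(k), len(k[0])
    return [[sum(k[a][b] * x[i + a][j + b] for a in range(kh) for b in range(kw))
             for j in range(len(x[0]) - kw + 1)]
            for i in range(len(x) - kh + 1)]


col = [[1], [2], [1]]                              # 3x1 kernel
row = [[1, 0, -1]]                                 # 1x3 kernel
full = [[c[0] * r for r in row[0]] for c in col]   # 3x3 outer product

x = [[(3 * i + 5 * j) % 7 for j in range(6)] for i in range(6)]

two_step = correlate2d(correlate2d(x, col), row)   # 3 + 3 = 6 weights
one_step = correlate2d(x, full)                    # 9 weights
print(two_step == one_step)  # True
```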

8. DenseNet (2016)

- Key Idea: Introduced dense connectivity: within each dense block, every layer receives the concatenated feature maps of all preceding layers as input, which improves gradient flow and encourages feature reuse.

- Application: Image classification, segmentation.

- Notable Paper: Gao Huang et al., “Densely Connected Convolutional Networks”, 2016.
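Inside a dense block, layer ℓ receives the concatenation of the block input and all earlier layers' outputs. If each layer adds k feature maps (the growth rate), layer ℓ therefore sees k0 + k·(ℓ − 1) input channels. A minimal sketch that tracks only this channel bookkeeping; the example values (k0 = 64, k = 32, 6 layers) are assumptions chosen to resemble the first block of DenseNet-121.

```python
def dense_block_channels(k0: int, growth: int, n_layers: int):
    """Return per-layer input channels and the block's output channels."""
    features = [k0]  # channel counts of the tensors concatenated so far
    inputs = []
    for _ in range(n_layers):
        c_in = sum(features)      # concatenate all previous feature maps
        inputs.append(c_in)
        features.append(growth)   # each layer contributes `growth` new maps
    return inputs, sum(features)


inputs, c_out = dense_block_channels(k0=64, growth=32, n_layers=6)
print(inputs)  # [64, 96, 128, 160, 192, 224]
print(c_out)   # 256
```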

9. Xception (2017)

- Key Idea: An “extreme” version of Inception that replaces Inception modules with depthwise separable convolutions, using parameters more efficiently while maintaining or slightly improving accuracy.

- Application: Image classification.

- Notable Paper: François Chollet, “Xception: Deep Learning with Depthwise Separable Convolutions”, 2017.
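A depthwise separable convolution splits a standard convolution into two steps: a depthwise convolution that filters each input channel with its own spatial kernel, then a 1×1 pointwise convolution that mixes channels. A minimal pure-Python sketch on a channels-first nested-list tensor with "valid" padding; names, shapes, and kernel values are illustrative. Xception's actual blocks also include batch normalization, ReLU, and residual connections, which are omitted here.

```python
def depthwise(x, kernels):
    """x: [C][H][W]; kernels: [C][k][k], one spatial kernel per channel."""
    out = []
    for xc, kc in zip(x, kernels):
        k = len(kc)
        h, w = len(xc) - k + 1, len(xc[0]) - k + 1
        out.append([[sum(kc[a][b] * xc[i + a][j + b]
                         for a in range(k) for b in range(k))
                     for j in range(w)] for i in range(h)])
    return out


def pointwise(x, weights):
    """1x1 convolution. weights: [C_out][C_in] mixes channels at each pixel."""
    h, w = len(x[0]), len(x[0][0])
    return [[[sum(wo[c] * x[c][i][j] for c in range(len(x)))
              for j in range(w)] for i in range(h)]
            for wo in weights]


def separable_conv(x, dw_kernels, pw_weights):
    return pointwise(depthwise(x, dw_kernels), pw_weights)


# 2 input channels, 5x5 maps, 3x3 depthwise kernels, 4 output channels.
x = [[[float(c + i * j) for j in range(5)] for i in range(5)] for c in range(2)]
dw = [[[1.0 / 9] * 3 for _ in range(3)] for _ in range(2)]  # per-channel box blur
pw = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.5, -0.5]]
y = separable_conv(x, dw, pw)
print(len(y), len(y[0]), len(y[0][0]))  # 4 3 3
```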

10. MobileNet (2017)

- Key Idea: Designed for mobile and embedded devices, MobileNet uses depthwise separable convolutions to significantly reduce the number of parameters while maintaining performance.

- Application: Mobile and embedded vision tasks.

- Notable Paper: Andrew G. Howard et al., “MobileNets: Efficient Convolutional Neural Networks for Mobile Vision Applications”, 2017.
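The saving can be quantified. For a D_K×D_K kernel, M input channels, N output channels, and a D_F×D_F output map, a standard convolution costs D_K²·M·N·D_F² multiply-adds, while a depthwise separable one costs D_K²·M·D_F² + M·N·D_F², a ratio of 1/N + 1/D_K². A minimal sketch of this arithmetic as given in the MobileNets paper; the layer sizes in the example are illustrative.

```python
def standard_cost(dk: int, m: int, n: int, df: int) -> int:
    """Multiply-adds of a standard dk x dk convolution."""
    return dk * dk * m * n * df * df


def separable_cost(dk: int, m: int, n: int, df: int) -> int:
    """Multiply-adds of a depthwise separable convolution."""
    depthwise = dk * dk * m * df * df  # one dk x dk filter per input channel
    pointwise = m * n * df * df        # 1x1 conv mixing M -> N channels
    return depthwise + pointwise


dk, m, n, df = 3, 256, 256, 14         # illustrative layer sizes
ratio = separable_cost(dk, m, n, df) / standard_cost(dk, m, n, df)
print(f"separable / standard = {ratio:.4f} (1/N + 1/Dk^2 = {1 / n + 1 / dk**2:.4f})")
print(f"about {1 / ratio:.1f}x fewer multiply-adds")
```

With 3×3 kernels the 1/D_K² term dominates, so the saving approaches 9× as N grows.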

11. U-Net (2015)

- Key Idea: A CNN architecture specifically designed for image segmentation tasks. U-Net uses a symmetric encoder-decoder structure with skip connections to better retain spatial information.

- Application: Medical image segmentation.

- Notable Paper: Olaf Ronneberger et al., “U-Net: Convolutional Networks for Biomedical Image Segmentation”, 2015.
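The original U-Net uses unpadded 3×3 convolutions, so each convolution trims 2 pixels and the encoder feature maps must be center-cropped before they are concatenated in the decoder. A minimal sketch tracing the side length through the paper's four-level layout (two 3×3 convolutions per level, 2×2 max pooling, 2×2 up-convolutions), assuming a 572×572 input tile; the function name is hypothetical.

```python
def unet_sizes(n: int, levels: int = 4):
    """Trace side lengths through a U-Net with unpadded 3x3 convolutions."""
    skips = []
    for _ in range(levels):          # contracting path
        n -= 4                       # two 3x3 valid convs
        if n % 2:
            raise ValueError("feature map size must be even before pooling")
        skips.append(n)
        n //= 2                      # 2x2 max pool
    n -= 4                           # two 3x3 convs at the bottom
    crops = []
    for skip in reversed(skips):     # expansive path
        n *= 2                       # 2x2 up-convolution
        crops.append((skip, n))      # encoder map is center-cropped: skip -> n
        n -= 4                       # two 3x3 valid convs after concatenation
    return n, crops


out, crops = unet_sizes(572)
print(out)    # 388
print(crops)  # [(64, 56), (136, 104), (280, 200), (568, 392)]
```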

12. Mask R-CNN (2017)

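- Key Idea: Extends Faster R-CNN to instance segmentation by adding a branch that predicts a segmentation mask for each region of interest, in parallel with the existing classification and bounding-box branches. It also introduced RoIAlign to avoid the misalignment caused by RoIPool's coordinate quantization.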

- Application: Object detection and segmentation.

- Notable Paper: Kaiming He et al., “Mask R-CNN”, 2017.
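Mask R-CNN replaced RoIPool's coordinate rounding with RoIAlign, which samples the feature map at exact, unquantized positions using bilinear interpolation; this matters for pixel-accurate masks. A minimal sketch of that sampling step on a single-channel map, not the full RoIAlign operator (which aggregates several such samples per output bin); the function name is hypothetical.

```python
import math


def bilinear(feature, y: float, x: float) -> float:
    """Sample a 2-D feature map (list of rows) at fractional (y, x).

    Assumes 0 <= y <= H-1 and 0 <= x <= W-1.
    """
    h, w = len(feature), len(feature[0])
    y0, x0 = int(math.floor(y)), int(math.floor(x))
    y1, x1 = min(y0 + 1, h - 1), min(x0 + 1, w - 1)
    dy, dx = y - y0, x - x0
    top = (1 - dx) * feature[y0][x0] + dx * feature[y0][x1]
    bottom = (1 - dx) * feature[y1][x0] + dx * feature[y1][x1]
    return (1 - dy) * top + dy * bottom


fmap = [[0.0, 10.0],
        [20.0, 30.0]]
print(bilinear(fmap, 0.5, 0.5))  # 15.0, the average of the four cells
print(bilinear(fmap, 0.0, 1.0))  # 10.0, an exact grid point
```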

13. EfficientNet (2019)

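- Key Idea: Introduced compound scaling, a systematic way to scale a CNN by balancing network depth, width, and input resolution with a single coefficient. At the time of publication, EfficientNet reached state-of-the-art ImageNet accuracy with far fewer parameters than previous models.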

- Application: Image classification.

- Notable Paper: Mingxing Tan & Quoc V. Le, “EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks”, 2019.
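Compound scaling ties the three dimensions to one coefficient φ: depth d = α^φ, width w = β^φ, and resolution r = γ^φ, subject to α·β²·γ² ≈ 2 so that FLOPS grow roughly as 2^φ. A minimal sketch of that rule, using the constants α = 1.2, β = 1.1, γ = 1.15 reported in the EfficientNet paper; mapping φ to specific B1–B7 models is not attempted here.

```python
ALPHA, BETA, GAMMA = 1.2, 1.1, 1.15  # depth, width, resolution bases


def compound_scale(phi: float) -> dict[str, float]:
    """Multipliers for depth, width, and resolution at compound coefficient phi."""
    depth = ALPHA ** phi
    width = BETA ** phi
    resolution = GAMMA ** phi
    # FLOPS of a conv net grow about linearly with depth and quadratically
    # with width and resolution.
    flops = depth * width ** 2 * resolution ** 2
    return {"depth": depth, "width": width, "resolution": resolution, "flops": flops}


for phi in range(4):
    s = compound_scale(phi)
    print(phi, {k: round(v, 3) for k, v in s.items()})
```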

14. RegNet (2020)

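- Key Idea: Instead of designing individual networks, the authors designed and progressively constrained network design spaces. The resulting RegNet space follows a simple rule in which block widths are a quantized linear function of depth, yielding models that are simple, scale well, and are efficient.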

- Application: Image classification, detection tasks.

- Notable Paper: Ilija Radosavovic et al., “Designing Network Design Spaces”, 2020.
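RegNet's design space is described by a few parameters: block widths start from u_j = w_0 + w_a·j (linear in the block index j), are snapped to the nearest power of a multiplier w_m relative to w_0, and consecutive blocks with equal width form a stage. A minimal sketch of that quantized-linear rule under stated assumptions: widths are additionally rounded to a multiple of 8, group-width compatibility is ignored, and the example parameters are hypothetical rather than taken from a published RegNet model.

```python
import math
from itertools import groupby


def regnet_widths(w0: float, wa: float, wm: float, depth: int, q: int = 8):
    """Per-block widths from a quantized linear width rule."""
    widths = []
    for j in range(depth):
        u = w0 + wa * j                             # linear width
        s = round(math.log(u / w0) / math.log(wm))  # snap to a power of wm
        w = w0 * wm ** s
        widths.append(int(round(w / q) * q))        # multiple of q (assumption)
    return widths


def stages(widths):
    """Group consecutive blocks with equal width into (width, depth) stages."""
    return [(w, len(list(g))) for w, g in groupby(widths)]


ws = regnet_widths(w0=24, wa=36, wm=2.5, depth=13)  # hypothetical parameters
print(ws)
print(stages(ws))
```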

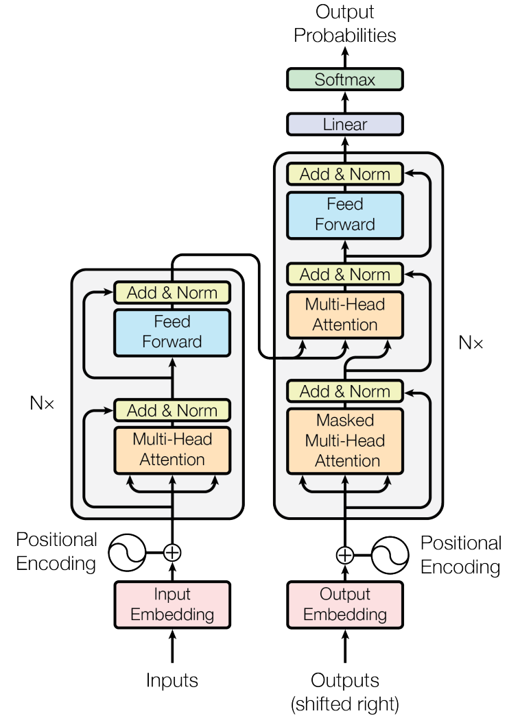

15. Vision Transformers (ViT) (2020)

- Key Idea: Applied the Transformer architecture directly to vision, showing that when pre-trained on large datasets, Transformers can match or outperform CNNs. ViT splits an image into fixed-size patches and processes the sequence of patch embeddings like a sequence of tokens.

- Application: Image classification.

- Notable Paper: Alexey Dosovitskiy et al., “An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale”, 2020.
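ViT turns an H×W×C image into a sequence by cutting it into non-overlapping P×P patches and flattening each one, giving N = HW/P² tokens of length P²·C (for a 224×224 RGB image and P = 16, that is 196 tokens of length 768). A minimal pure-Python sketch of this patchify step; the learned linear projection, class token, and position embeddings that follow in ViT are omitted, and the function name is hypothetical.

```python
def patchify(image, p: int):
    """Split an H x W x C image (nested lists) into flattened P x P patches.

    Patches are returned in raster order; each is flattened row by row,
    with channels innermost.
    """
    h, w = len(image), len(image[0])
    if h % p or w % p:
        raise ValueError("image size must be divisible by the patch size")
    patches = []
    for i in range(0, h, p):
        for j in range(0, w, p):
            patches.append([value
                            for row in image[i:i + p]
                            for pixel in row[j:j + p]
                            for value in pixel])
    return patches


img = [[[r * 4 + c] for c in range(4)] for r in range(4)]  # 4x4, 1 channel
print(patchify(img, 2))  # [[0, 1, 4, 5], [2, 3, 6, 7], [8, 9, 12, 13], [10, 11, 14, 15]]

tokens = patchify([[[0.0] * 3 for _ in range(224)] for _ in range(224)], 16)
print(len(tokens), len(tokens[0]))  # 196 768
```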

Summary of CNN Variants:

- LeNet (1998)

- AlexNet (2012)

- ZFNet (2013)

- VGGNet (2014)

- GoogLeNet (Inception v1) (2014)

- ResNet (2015)

- Inception v2/v3 (2015-2016)

- DenseNet (2016)

- Xception (2017)

- MobileNet (2017)

- U-Net (2015)

- Mask R-CNN (2017)

- EfficientNet (2019)

- RegNet (2020)

- Vision Transformers (ViT) (2020)

These variants reflect continuous advances in architecture design, from early, simple models such as LeNet to sophisticated networks such as EfficientNet, and beyond convolution to Vision Transformers. Each introduced innovations that addressed specific limitations of earlier models, improving accuracy, efficiency, and the ability to scale to larger datasets and more complex tasks.

Models

An Introduction to Convolutional Neural Networks (15/11)

Paper link

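Core idea: CNNs are mainly used to solve difficult image-driven pattern recognition tasks, and their precise yet simple architecture offers a simplified way to get started with ANNs. This paper gives a brief introduction to CNNs and discusses recently published papers and newly developed techniques for building these outstanding image recognition models.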

Unless otherwise stated, all articles on this blog are licensed under CC BY-NC-SA 4.0. Please credit Model The World! when reposting.